Quick tip for Time Machine backups: Mac OS X Server ("Server.app" these days) can set up a Time Machine quota that applies per machine rather than per user. The trick actually works on any share, since the quota is just a root-owned property list in the share's directory. You'll want Mavericks or later on the client for this to work. To set it up, cd into the root of the Time Machine share and create the plist (the value is in bytes; this example sets a 500GB quota):

/usr/libexec/PlistBuddy -c 'Add :GlobalQuota integer 500000000000' .com.apple.TimeMachine.quota.plist

On the server, make the file owned by root:wheel with mode 644 (chown root:wheel, then chmod 644) et voilà! You have quotas. No need for "real" quotas, LVM, or other fun. Works over SMB and AFP.

After watching yesterday's Apple Event and reading some of the reactions, I've become concerned for the future of the Mac, at least in the hands of Apple's current leadership.

For a long time we (creatives, power users, and developers, the "Pro" in the product names) feared that Apple's success with iOS would manifest itself as a locked-down Mac with candy icons on the screen. While that has not come to pass, something far more damaging has: Apple completely forgot why people use Macs.

There's a Tim Cook quote that has resurfaced in the past day:

“I think if you’re looking at a PC, why would you buy a PC anymore? No really, why would you buy one?”

Tim Cook, talking about the iPad Pro (from Charged Tech)

That one quote speaks volumes. In fairness, perhaps it wasn't meant to. Perhaps it was off the cuff and meant only to say that the iPad Pro is a lovely little tablet that meets the needs of all the casual users in the world. But that the thought was there to be spoken at all suggests it has been said a lot internally, in one way or another.

It seems to me that Apple sees the distinction between the Mac and iOS as purely about how we interact with the device (a chair and keyboard/pointer versus lounging and casually poking around) instead of realizing there's a fundamental reason why the interaction patterns differ. They appear to have only a superficial understanding of why people use Macs, rather than the deep understanding of their customer base that was once apparent in the design.

Four years. It's been four years since Apple last significantly updated the MacBook Pro. I own that machine and I'm typing on it now (a Mid 2012 Retina MacBook Pro). What's really shocking is that it still holds up against machines sold today. It is powerful and progressive, and a statement about future-proofing portable computers. It's thin, but not so thin it does nothing. It's powerful, but not so power-hungry that the battery can't last most of the day. It's light, but not so light that it's missing significant features.

However, even it missed some marks. As part of Apple's new serial port-murdering mentality, the ethernet port was removed from the computer. At the time I recall hearing someone in the presentation say: "When was the last time you used wired networking? Wireless is the future." Wireless is the future, yes. The problem with the future is that it's not the present, and removing a port from a single product will not change the world overnight. Corporations will not change their massive network deployments and port-level security. Homes will not suddenly be covered in wireless in every corner. Hotels will not suddenly have reliable and secure wireless networks. Even today I wind up using wired ethernet daily in some fashion.

It was a mistake, and not the only one. That model also soldered the RAM to the motherboard, switched to proprietary flash storage, and sealed the computer. The previous model was delightfully expandable with just a small tug on a lever to expose the battery, 2.5" SATA bay, and the SO-DIMMs used for RAM. Gone. No upgrades. Buy today what you'll want in five years and hope the battery lasts. The iMac and Mac mini were hit with similar reductions in expandability (though they kept their ethernet ports).

In short order, new MacBooks lost just about every other port on the machine, and they even went as far as a single port that merged power and connectivity. (Think about that one, because they did it again with the iPhone 7 recently.) That left the MacBook with no connectivity while it was charging at a desk, and no way to charge while it was driving an external device that needed power. If I had been in the room, in a position of power, when that was suggested, there would have been one more junior whatever on staff in short order. Very few people never, ever use their computer at a desk with something connected to it. Of course, there was a solution. You guessed it: a dongle that accepted power and exposed a USB-C port. The same idea they used on the iPhone 7. People hate it.

Now we come to today, four years after the previous, mostly successful redesign (I'm still using my Thunderbolt-Ethernet dongle). What got announced? All ports are going away, replaced with a connectivity standard that very few manufacturers, Apple included, are actually using yet. For just about everything you need to do with your computer, you're going to have to live a life of dongles. Whatever port you were using and relying on is dead. No peripheral you currently own can be attached without a dongle.

Do you use an external display with Mini-DisplayPort? Dongle.

Do you use an external display, projector, or TV with HDMI? Dongle.

How about an external hard drive, thumb drive, keyboard, mouse? Dongle. Dongle. Dongle. Dongle.

Hey, do you have an iPhone or iPad? Dongle! Or purchase the USB-C to Lightning cable that nothing comes with right now. $25, please.

You know, that's enough dongles that maybe you just want one super-dongle to rule them all. Well, those exist as well.

(By the way, none of those support power delivery of 87W, so they can't charge the new MacBook Pro. The OWC one comes close at 80W, but is still a bit shy. I would not pre-order one of those alongside your new MacBook Pro expecting a single-cable setup, or you'll be very disappointed and have to plug in two USB-C cables.)

What this means, dear reader, is that if you are one of The Forgotten then you have to drop another $200-400 to purchase all the ports that Apple removed. It's like system building with expensive and wobbly LEGO bricks, and has all the appeal of that phrase.

If this were a situation where you'd buy one or two of them and be done with it, it might be okay. Might. But it isn't. You'll need a USB-A adapter (or three), a video adapter of some kind (unless you drop $2K on the 5K LG display), perhaps a wired ethernet adapter (always have one to be safe), and an SD card reader if you're a photographer (and get another USB-A adapter to leave plugged into that thing). Also, if you ever have to load photos from a card, save them to an external disk, preview them on an external display, and then upload them over a wired connection (not too far from a real "Pro" workflow), you aren't charging your Mac while you do it, because all four ports are taken up by dongles.

Apple thinks their Pro users are Pro Video users: people who use Final Cut Pro (though many, perhaps most, moved away after the Final Cut Pro X fiasco) and need desktop RAIDs and large displays … and that's it, save perhaps an audio user or two. Folks like me, who have been using computers for many decades and connect all kinds of things to them, are generally left out.

However, even common office workers are left out to a degree (ethernet! video!). Apple wants us to move to a dock-and-run lifestyle, but they are unwilling to make the dock or make it easier to run. They used to make a dock, but they killed it with no replacement, pointing users to a third-party display instead. Even then, another $2K for those missing ports is obscene. The reason, of course, is that the ports come bundled with a screen.

Don't do that. Don't ever do that. If you see that, don't ever buy that. You've just tied the lifespan of your display to the lifespan of the specific ports it supports. If 10-gigabit ethernet somehow becomes a household standard, you're out of luck. USB 4? Nope. Thunderbolt 4? You're stuck, even if that display is still wonderful and otherwise useful. (I'm saying this as I look at the disconnected Thunderbolt Display sitting on my floor, because it's utterly useless on anything except my Mac. I use three desktop platforms and have limited desk space, so everyone shares one display. HDMI KVM FTW. At least, until yesterday.)

But it's thinner. It's using Skylake (not Kaby Lake, mind you). It's powerful and wonderful and does magical OLEDy things. We can forgive them these slights because they did them in pursuit of thin and sexy, right?

Except that thin and sexy has been done without removing ports, while fully utilizing Thunderbolt 3 and USB 3, and even incorporating a nearly desktop-class GPU. Have you seen the Razer Blade Pro yet? You should, especially if you're OS-flexible. Even the Razer Blade Stealth pulled it off better than the 13" MacBook Pro did (it still has USB-C, USB-A, and HDMI). Both include high-resolution, wide-color displays (a different gamut, but still wide-color). Both are actual touchscreen computers as well. They didn't even have to murder the Escape or Function keys to get there. (I'm in the CLI all day so that one hurt, but I know I'm a rarity on that.)

They made a reference to the original PowerBook in the show yesterday. It actually made me sad to see it, because the PowerBook line was so much more of a computer than the toys they sell today, and so much more expandable and versatile, especially during the Wall Street and Pismo years. Need two batteries? Do it. Need a battery and an extra drive? Do it. Need two drives while you're on wall current? Do it. Today? Can't do it; use another dongle. Out of ports? There's a dongle for that, so you can add more dongles (a USB hub).

I can't help but feel that Apple has decided the core audience of their Unix-based powerhouse OS is the latte-sipping children in campus coffee shops, and that anything about their systems that appeals to anyone else is just something to remove on the way to a sheet of paper with nothing but content. Frankly, it's that total disconnect between what computer users want and what mobile users want that has me worried about the Mac. The source of my fear, after much contemplation, is that the same people who design the Mac are designing the iOS devices, and that's a horrible situation for both platforms.


Wanting to upgrade the input devices at home, I started looking around for a good keyboard and mouse combo. The business-oriented lines were nice in their own ways, but they lacked a certain flair and were woefully short on buttons and standard layouts. (What's with everyone screwing with the standard keyboard layout? Stop it. I like my buttons.)

As a result, I started to look at the gaming series of devices. I'm not sure how I wound up looking at them, honestly, but once I started to look at the options it was clear to me that all the attention on making input devices better at a hardware level was going into that market instead: the keyboards were mostly mechanical, the mice were high-DPI and loaded with buttons, and the quality was far and away higher — as were the prices, of course.

After some research I picked up the Corsair K70 keyboard and Corsair M65 mouse. Neither is gratuitous with the lights off, and the lights are quite helpful if you set them up accordingly. By which I mean: think back to the 1980s and keyboard overlays for Lotus 1-2-3. Like that, but with colors. When switching to a game, you can have the lights come up for the keys you normally use and color-code them into groups (movement, actions, macros, etc.).

When using it for daily stuff in Windows, and some games, it proved quite the nice combo. The mouse has buttons to raise and lower the DPI and a quick-change button at the thumb for ultra-precise movement (think snipers in an FPS game or clicking on a link on an overly-designed web page where the fonts are 8pt ultra-lights — yes, I really used it for that once).

However, I quickly found the Corsairs had a very large weakness: they only worked in Windows. I don't just mean the customization and macros; the devices themselves did not show up as USB HID devices in Linux or on the Mac. They failed to be a keyboard and mouse on every other computer I have. Normally I would blame this on my KVM's USB emulation layer, but I connected them directly and nothing changed. That is, until I read the manual and discovered the K70 had a switch on the back to toggle "BIOS mode". Then it worked, but I lost some customization, and the Scroll Lock light flashed constantly to tell me I wasn't getting my 1ms response time anymore (no, you can't turn that off; it flashes forever).

To add to the fun, the keyboard has a forked cable. One USB plug is for power and one is for data. If you connect the data cable to a USB 3 port on the computer itself then it can get the power it needs and you don't need the other. If you use a KVM or USB 2 hub then you're using both.

Overall, the frustrations outweighed the utility and I returned them both. I did some more research and found that, of all companies, Logitech fully supported their gaming devices on both Mac and Windows and their devices started in USB HID mode and only gained the fancy features when the software was installed on the host machine.

Taking that into consideration I went ahead and picked up the G810 Orion Spectrum keyboard and the G502 Proteus Spectrum mouse.

To summarize the differences:

- Both keyboard and mouse work well on Windows, Mac, and Linux.

- Logitech's gaming software works well on Windows and Mac. (On the Mac, be sure not to Quit it and only close the window when done. Otherwise the driver closes and the lights go back to the default. It will remove itself from the dock and live in the menu bar when you close the window.)

- The mouse works on the mouse port of the KVM, but the keyboard needs the raw USB port no matter what. In the world of compromises, I can live with that.

- The keyboard does not have the immense lighting customizations that the Corsair does. I, however, consider the lighting sufficiently helpful and I don't need my audio's spectrum dancing across my fingers as I work/play.

- The keyboard does use Logitech's custom Romer-G switches instead of the Cherry MX Browns in the K70. Frankly, while there is a difference, it's not a difference for either good or ill. It's perfectly fine and just as enjoyable.

I'm especially happy that both keyboards had a dedicated button for turning the lights off when I just wanted a good mechanical keyboard and back on when I want to do something that it adds value to. That's a nice selling point for both, really.

At any rate, if you have a Mac, it appears only Logitech still cares about you. That's perfectly fine with me as they make some good stuff, overall.

… it could always be Mac OS 9.

If you're having trouble getting Doxygen to parse NS_ENUM statements, here's the config file magic:

ENABLE_PREPROCESSING = YES

MACRO_EXPANSION = YES

PREDEFINED = "NS_ENUM(_type, _name)=enum _name : _type"

You may also want to enable EXPAND_ONLY_PREDEF, which limits macro expansion to the macros listed in PREDEFINED (here, just NS_ENUM).

Swift & the Objective-C Runtime:

Even when written without a single line of Objective-C code, every Swift app executes inside the Objective-C runtime, opening up a world of dynamic dispatch and associated runtime manipulation

With an opener like that, how can you not read on (and come away both slightly horrified and slightly optimistic)?

High Caffeine Content — MPW, Carbon and building Classic Mac OS apps in OS...:

What interested me the most is how so much of the API remained identical - I was still using only functions that existed on System 1.0 in my app, but they were working just the same as ever in a Carbonized version. The single built binary ran on OS 8.1 all the way to 10.6 (care of Rosetta).

My mind wandered to Carbon as it exists in 10.10. While Apple decided not to port it to 64-bit (for all the right reasons), the 32-bit version of Carbon is still here in the latest release of OS X - I wondered how much of it was intact.

Turns out the answer is: all of it.

Yosemite and Default URL Handlers – Edovia Blog:

Unfortunately, Apple is now blocking sandboxed apps to change the default handler for a particular URL scheme. This is why Screens is not able to set Screen Sharing back as the default handler. This change affects a whole bunch of apps that use to rely on this functionality.

The sandboxing rules on OS X have always been a source of difficulty, as some cautioned early on. At the time I felt that Apple would see the harsh edges and work something out with developers so that more useful apps could be in the MAS over time. I thought that perhaps they’d sort it out to the level that even top-tier folks would manage to get their apps in there (think Photoshop or Office).

What I didn’t consider, and I don’t think anyone really had a reason to at the time, is that Apple might tighten the requirements over time and try to turn OS X’s sandbox — ever so slowly — into iOS’ sandbox. With every release there’s one more thing that can’t be accessed, one more app Apple kicks out, and one more developer walking out because of the whole mess (be it sandboxing, the review process, generally poor communication, or whatever else).

As a result, the MAS is full of ported games, uselessly simplistic productivity tools, “system utilities” that do absolutely nothing useful in the first place and are only differentiated by their appearance, and some apps that manage to hang on and haven’t done anything to awaken the Beast … yet.

I think Apple’s forgotten what a desktop computer is used for in the real world at this point. When Lion came out, I stayed with Snow Leopard because I saw it as the iOS-ification of the Mac. When Mavericks came out, I was convinced that’s what was happening. Honestly, I only upgraded my OS because I upgraded my Mac and now I just hope the next version will fix something, anything that the previous ones have taken away from the Mac.

Time and again, Apple tells us that it has no idea what the Mac is for anymore, other than coding for iOS and using Mail and Safari on a large screen. If there’s something you can do on a Mac that isn’t related, then it’s time to sandbox it.

Time will, of course, move forward. The sandbox will get tighter and tighter. More and more developers will leave it. In the end, Apple will have ruined the one thing that could have been amazing on the Mac: a thriving community of independent software makers sharing their work. Instead, they'll have an abundance of two-year-old Me-Too games, far-too-minimal general purpose apps, and lots and lots of useless trash.

Worse? People who see the MAS will think that’s all you can do on a Mac. And then leave.


PS: Before it’s said, yes, this would have happened under Steve. He’s the one that started it, after all. This is what a walled garden does: it keeps everyone out.

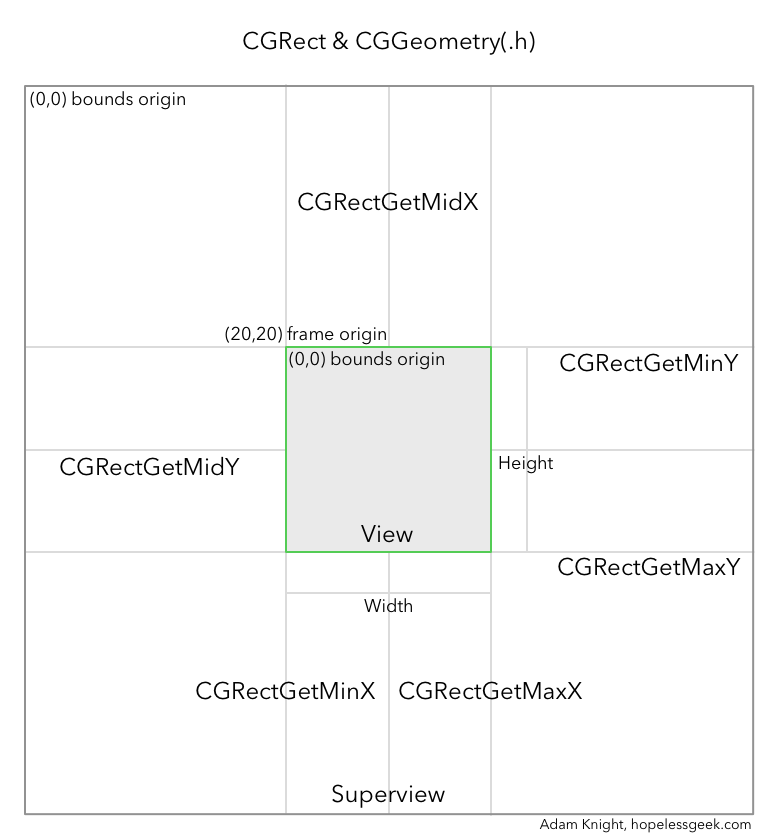

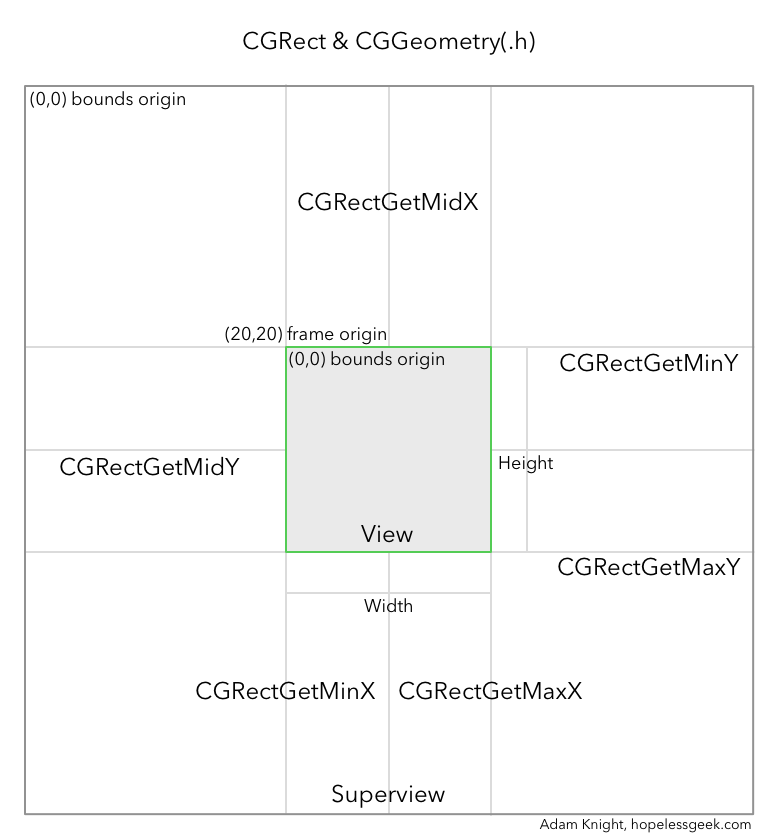

The best description of how Cocoa's views are designed that I could find from Apple is in the Cocoa Views Guide PDF, in the chapter called View Geometry. If you're trying to get your head around what a CGRect is and why self.view.frame.origin.x++ doesn't work, that's required reading. (A hint on the latter: CGRect is a plain C struct, not an object, and view.frame returns it by value. The getter hands you a temporary copy, and the compiler refuses to let you modify a field of that copy.)

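That said, here's a handy graphic to help you get your head around not only when to use frame and when to use bounds, but also what they mean in the grand scheme of things. (The short version: frame is the view's rectangle in its superview's coordinate system; bounds is the same area in the view's own coordinate system.) Also included are some visual guides on when to use some handy CGGeometry.h functions to do the work for you.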

Caveat emptor: the above describes the iOS coordinate system, which is technically inverted (the origin is at the upper left and Y grows downward). Mac OS X's origin is at the lower left (Cartesian quadrant 1), so while all the X-related items are correct, on Mac OS X the Y-related items are reversed, with CGRectGetMinY at the bottom (unless the view is flipped, i.e. its isFlipped method returns YES).
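If you need to move a rect between the two conventions by hand, the arithmetic is small. This is a plain-C sketch with hypothetical stand-in types (not the real CGRect); it assumes both spaces share the same container height and no scaling. X and the size are unchanged; only the origin's Y is mirrored, and the mapping is its own inverse.

```c
#include <assert.h>

/* Hypothetical stand-ins shaped like CGPoint/CGSize/CGRect (not the real types). */
typedef struct { double x, y; } Point;
typedef struct { double width, height; } Size;
typedef struct { Point origin; Size size; } Rect;

/* Convert a rect between a top-left origin (Y grows down, iOS-style) and a
   bottom-left origin (Y grows up, unflipped Mac-style) inside a container of
   the given height. Applying it twice returns the original rect. */
Rect flip_rect_y(Rect r, double container_height) {
    assert(container_height >= 0.0);
    Rect out = r;
    out.origin.y = container_height - r.origin.y - r.size.height;
    return out;
}
```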

Examples

How about some examples? There are also two more functions that are day-savers here. These take in a rect and then spit out a modified rect. Because they act on copies (pass-by-value) you can input one rect and output it somewhere else. How about setting a new view to be a 10pt inset from the current bounds? Easy.

(CGRect) CGRectOffset(rect, dx, dy)

Moves the origin of the rect by the delta X and Y.

(CGRect) CGRectInset(rect, dx, dy)

Returns a resized copy of the rect with the same center point: the left and right edges each move inward by dx, and the top and bottom edges each move inward by dy. Positive deltas shrink the rect; negative values grow it.
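Here is a plain-C sketch of what those two functions compute, useful for reasoning about the numbers. The types and the rect_offset/rect_inset names are hypothetical stand-ins, not CoreGraphics itself. One simplification to note: the real CGRectInset standardizes the rect first and returns a null rect if the result would have a negative width or height, while this sketch just asserts against that case.

```c
#include <assert.h>

/* Hypothetical stand-ins shaped like CGPoint/CGSize/CGRect (not the real types). */
typedef struct { double x, y; } Point;
typedef struct { double width, height; } Size;
typedef struct { Point origin; Size size; } Rect;

/* Modeled on CGRectOffset: same size, origin moved by (dx, dy).
   r is a local copy, so the caller's rect is untouched. */
Rect rect_offset(Rect r, double dx, double dy) {
    r.origin.x += dx;
    r.origin.y += dy;
    return r;
}

/* Modeled on CGRectInset: each edge moves inward by dx or dy, so the width
   changes by 2*dx, the height by 2*dy, and the center stays put. */
Rect rect_inset(Rect r, double dx, double dy) {
    r.origin.x += dx;
    r.origin.y += dy;
    r.size.width -= 2.0 * dx;
    r.size.height -= 2.0 * dy;
    assert(r.size.width >= 0.0 && r.size.height >= 0.0);
    return r;
}
```

For the 10pt inset mentioned above, the real call would be `CGRectInset(self.view.bounds, 10.0, 10.0)`; in this model, a 320×480 bounds rect becomes a 300×460 rect at (10, 10) with the same center.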

Example: Move a view up or down by y.

view.frame = CGRectOffset(view.frame, 0, y);

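Example: Expand a view by 5.0 on the left, right, and top, keeping the bottom edge where it is (iOS coordinates). The inset grows the width by 10.0 and the height by 5.0 around the center; the offset then shifts all of the extra height to the top.

view.frame = CGRectInset(view.frame, -5.0, -2.5);

view.frame = CGRectOffset(view.frame, 0, -2.5);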
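A quick way to sanity-check edge arithmetic like this is to compute the edges before and after. This plain-C sketch uses hypothetical stand-in types and edge helpers modeled on CGRectGetMinX/MaxX/MinY/MaxY, with Y growing downward as on iOS. expand_except_bottom is a hypothetical helper that applies the combined effect of an inset of (-amount, -amount / 2) followed by an offset of (0, -amount / 2).

```c
#include <assert.h>

/* Hypothetical stand-ins shaped like CGPoint/CGSize/CGRect (not the real types). */
typedef struct { double x, y; } Point;
typedef struct { double width, height; } Size;
typedef struct { Point origin; Size size; } Rect;

/* Edge helpers; with Y down, "top" is min Y and "bottom" is max Y. */
double rect_min_x(Rect r) { return r.origin.x; }
double rect_max_x(Rect r) { return r.origin.x + r.size.width; }
double rect_min_y(Rect r) { return r.origin.y; }
double rect_max_y(Rect r) { return r.origin.y + r.size.height; }

/* Grow left, right, and top by `amount`; the bottom edge stays fixed. */
Rect expand_except_bottom(Rect r, double amount) {
    assert(amount >= 0.0);
    r.origin.x -= amount;          /* left edge moves out */
    r.origin.y -= amount;          /* top edge moves out */
    r.size.width += 2.0 * amount;  /* right edge moves out too */
    r.size.height += amount;       /* origin moved up by amount, so bottom is unchanged */
    return r;
}
```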

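Example: Is a view's origin in the top half of its superview? (Compare against the superview's bounds, not its frame: the view's frame is expressed in the superview's own coordinate system, which is what bounds describes.)

BOOL wellIsIt = (CGRectGetMinY(view.frame) < CGRectGetMidY(view.superview.bounds));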
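The frame-versus-bounds distinction matters as soon as the superview isn't sitting at (0, 0) in its own parent. This plain-C sketch (hypothetical stand-in types, Y growing downward) shows the check, and the tests include the mistake of passing the superview's frame instead: a child in the bottom half of a superview positioned at y = 300 is wrongly reported as being in the top half.

```c
#include <assert.h>

/* Hypothetical stand-ins shaped like CGPoint/CGSize/CGRect (not the real types). */
typedef struct { double x, y; } Point;
typedef struct { double width, height; } Size;
typedef struct { Point origin; Size size; } Rect;

/* Modeled on CGRectGetMinY / CGRectGetMidY. */
double rect_min_y(Rect r) { return r.origin.y; }
double rect_mid_y(Rect r) { return r.origin.y + r.size.height / 2.0; }

/* Returns 1 if the child's origin is in the top half of its parent.
   child_frame is in the parent's coordinate space, so it is compared
   against parent_bounds, which uses that same space. */
int origin_in_top_half(Rect child_frame, Rect parent_bounds) {
    assert(parent_bounds.size.height >= 0.0);
    return rect_min_y(child_frame) < rect_mid_y(parent_bounds);
}
```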

Example: Drag a view around in a UIPanGestureRecognizer callback.

Here’s a fun one. Note that you could also pass the resultant point into a transform so that failed drags could simply be an animated reset to an identity transform, but that’s no fun. Keep the original rect around somewhere and then just move it.

if ([gestureRecognizer state] == UIGestureRecognizerStateBegan ||
    [gestureRecognizer state] == UIGestureRecognizerStateChanged) {
    CGPoint point = [gestureRecognizer translationInView:self.view];
    floatyView.frame = CGRectOffset(floatyView.frame, point.x, point.y);
    [gestureRecognizer setTranslation:CGPointZero inView:self.view];
}
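The setTranslation:CGPointZero call matters because the recognizer reports the translation accumulated since the last reset, not since the last callback. This plain-C sketch models that with a hypothetical Pan struct (one axis only, not the UIKit API) and replays the same finger positions with and without the reset. Without it, each callback re-applies movement that was already applied and the view overshoots.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical one-axis model of a pan recognizer. */
typedef struct {
    double finger_x;  /* current finger position */
    double anchor_x;  /* finger position at the last reset */
} Pan;

/* Translation since the last reset, like -translationInView:. */
double pan_translation(const Pan *p) { return p->finger_x - p->anchor_x; }

/* Like -setTranslation:CGPointZero inView:. */
void pan_reset_translation(Pan *p) { p->anchor_x = p->finger_x; }

/* Replays finger positions, offsetting view_x by the reported translation
   on each callback. With reset != 0 this mirrors the handler above. */
double drag(double view_x, double start, const double *positions, size_t n, int reset) {
    assert(positions != NULL || n == 0);
    Pan pan = { start, start };
    for (size_t i = 0; i < n; i++) {
        pan.finger_x = positions[i];
        view_x += pan_translation(&pan);
        if (reset) pan_reset_translation(&pan);
    }
    return view_x;
}
```

With the finger moving from 0 to 5, 12, and 20, a view starting at 100 ends at 120 with the reset and at 137 without it.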

Exercise: Manually convert a point from one coordinate system to another.

This is fun. (For varying degrees of fun.) A point is just an x/y pair; it has no idea where it came from. So (10,10) in your view is really (38,17) in your superview, or (40,17) in its superview. To live in this hellhole of a world, we typically lean on NSView or UIView and tell them to sort it out, like so:

[self.view convertPoint:point fromView:someSubview]

But, where’s the fun in that? More importantly: do you know what’s happening?

I’ll let you work on this on your own. You can always get the right answer with the -convertPoint:fromView: method, so you know the goal to reach. Here’s a hint: figure out whether fromView is an ancestor or a descendant of your view, then crawl up the superview chain from the lower view until you reach the other one, adding up the offset from each view’s frame along the way, and apply that total offset to the point.

Converting a whole rect is similar: convert its origin and keep its size.
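If you want to check your work afterwards, here is one minimal plain-C sketch of the hint. The View struct, offset_to_ancestor, and convert_point are hypothetical. The sketch ignores transforms and assumes each view's frame and bounds are the same size, so moving one level up is just "subtract the bounds origin, add the frame origin". Unrelated views (neither an ancestor of the other) are reported as a failure rather than routed through a common ancestor.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct { double x, y; } Point;

/* Hypothetical minimal view: frame origin in the superview, its own bounds
   origin (non-zero when scrolled), and a pointer to its superview. */
typedef struct View {
    Point frame_origin;
    Point bounds_origin;
    const struct View *superview;
} View;

/* Sum the offsets from `view` up to (not including) `ancestor`.
   Returns false if `ancestor` is not in view's superview chain. */
static bool offset_to_ancestor(const View *view, const View *ancestor, Point *out) {
    Point total = {0.0, 0.0};
    for (const View *v = view; v != NULL; v = v->superview) {
        if (v == ancestor) { *out = total; return true; }
        total.x += v->frame_origin.x - v->bounds_origin.x;
        total.y += v->frame_origin.y - v->bounds_origin.y;
    }
    return false;
}

/* Convert p from `from`'s coordinates into `to`'s when one is an ancestor
   of the other. Returns false for unrelated views. */
bool convert_point(Point p, const View *from, const View *to, Point *out) {
    Point off;
    assert(from != NULL && to != NULL && out != NULL);
    if (offset_to_ancestor(from, to, &off)) {  /* `to` is above `from` */
        out->x = p.x + off.x;
        out->y = p.y + off.y;
        return true;
    }
    if (offset_to_ancestor(to, from, &off)) {  /* `to` is below `from` */
        out->x = p.x - off.x;
        out->y = p.y - off.y;
        return true;
    }
    return false;
}
```

With a view at (28,7) inside a superview at (2,0), converting (10,10) gives (38,17) in the superview and (40,17) one level further up, matching the example above. Converting a rect this way means converting its origin and keeping its size.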

I love this stuff. :)